An Introduction to Apache Airflow

Summary

In this first part of a series, I will show you some basic concepts of Apache Airflow. The focus this time is on concepts rather than on complete Airflow programs. If you prefer video, you can watch it on YouTube:

https://youtu.be/zQySzUlI2O8

We will discuss the following topics:

- What is Airflow

- When to use it

- When not to use it

- Task

- Dag

- Scheduling

- Bash Operator

- XCom

- Python Operator

- Global Variables

- Cross Dag Variable passing

What actually is Apache Airflow?

Apache Airflow is a scheduling service: it lets you define jobs and run them on a schedule. It is written in Python. It was originally developed at Airbnb in 2014 and is now a project of the Apache Software Foundation.

When do you want to use it?

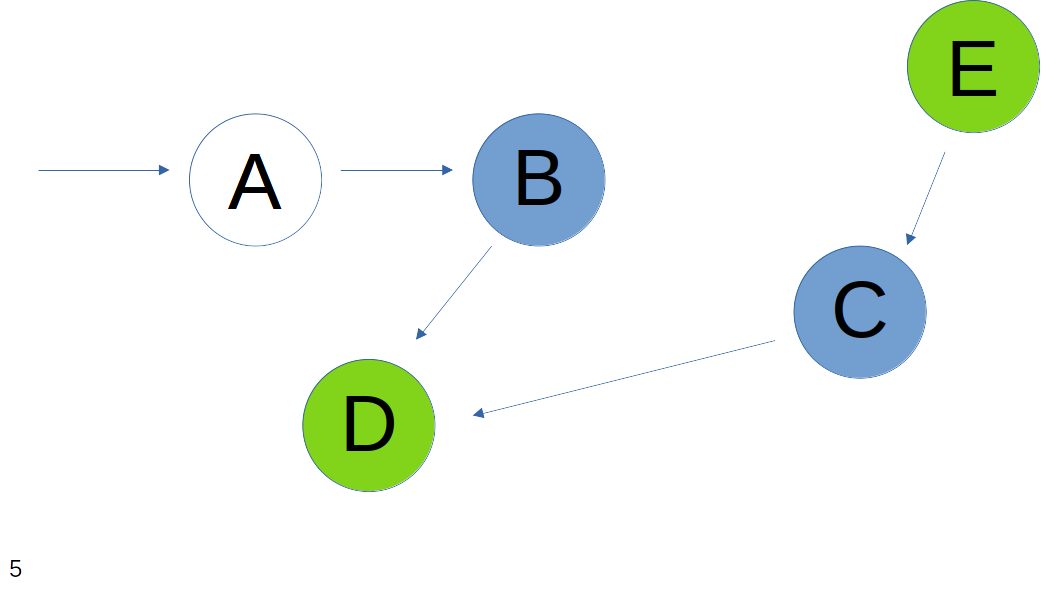

Imagine your jobs have dependencies like these. To execute "D", you have to wait for "C" and "B". To execute those two, "A" and "E" have to be executed first. "A" and "E" can run in parallel, because these dependencies are known to Apache Airflow. "B" and "C" can each start directly after their parent job has finished, and only "D" has to wait until both previous jobs have finished. Airflow lets you manage this kind of dependency quite easily using Python code.
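The dependency structure described above can be written down as a small graph. The following is a plain-Python sketch using the standard library's `graphlib` (Python 3.9 or newer), not Airflow code. It assumes that "B" depends on "A" and "C" depends on "E", which the description implies but does not state explicitly. It shows which jobs a scheduler could start together at each step.

```python
from graphlib import TopologicalSorter

# Each job maps to the set of jobs it has to wait for.
# Assumption: "B" follows "A" and "C" follows "E".
DEPENDENCIES = {
    "A": set(),
    "E": set(),
    "B": {"A"},
    "C": {"E"},
    "D": {"B", "C"},
}


def parallel_batches(dependencies):
    """Group jobs into batches; jobs within one batch can run in parallel."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the graph contains a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every job whose parents are done
        batches.append(ready)
        sorter.done(*ready)
    return batches


if __name__ == "__main__":
    for number, batch in enumerate(parallel_batches(DEPENDENCIES), start=1):
        print(f"step {number}: {', '.join(batch)}")
```

Grouping into batches is a simplification: a real scheduler would start "B" as soon as "A" has finished, even if "E" is still running.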

When do you not want to use it?

When you already have something similar in your stack - for example, AWS provides "Step Functions". But if you cannot use AWS, or you want to run on your own on-premise installation, you can use Apache Airflow. Also, when you have no branching or just a sequential order of execution, Airflow may be too much for your needs - but you can still use it. If you don't like Python, Apache Airflow will not be a good choice for you either.

Task

In Apache Airflow terminology, a Task is what I previously called a "job": a unit of work that you can run by itself. You can restart it and look at its logs using the Apache Airflow UI. We will have a look at the Apache Airflow UI in the next blog post.

Dag

A Dag (directed acyclic graph) is a container: a collection of tasks together with their dependencies on each other. You could call Apache Airflow an application for managing a collection of dags.

Scheduling

You cannot run jobs regularly by building a cycle into a dag - cycles are not allowed in Apache Airflow.

To get this kind of functionality, you can use a crontab-style definition in your dag to schedule it appropriately, for example every day at 9:00 am UTC. In many cases this removes the need for a cyclic dependency.
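To make the crontab-style definition concrete: the cron expression for "every day at 9:00 am" is `0 9 * * *`. The sketch below is plain Python, not Airflow's scheduler. The helper `next_daily_run` is a hypothetical name, and it only handles daily schedules. The point is that the dag itself stays acyclic, and the scheduler simply starts a fresh run at the next matching time.

```python
from datetime import datetime, timedelta, timezone

# Cron fields: minute hour day-of-month month day-of-week
DAILY_AT_NINE = "0 9 * * *"


def next_daily_run(now, cron=DAILY_AT_NINE):
    """Return the next run time strictly after `now` for a daily cron expression."""
    minute, hour, day, month, weekday = cron.split()
    if (day, month, weekday) != ("*", "*", "*"):
        raise ValueError("this sketch only supports daily schedules")
    candidate = now.replace(
        hour=int(hour), minute=int(minute), second=0, microsecond=0
    )
    if candidate <= now:
        # Today's slot has already passed, so the next run is tomorrow.
        candidate += timedelta(days=1)
    return candidate


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 8, 30, tzinfo=timezone.utc)
    print(next_daily_run(now).isoformat())  # 2024-01-01T09:00:00+00:00
```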

Bash Operator

Operators define what a task actually does. The first and simplest one is the "Bash Operator". It allows you to execute any bash command.

Let's look at an example: delete the last file in the current directory. First, we need to create a new file for testing. Then we use ls to list the directory content, from which we want to get the last element. With tail -n 1, we get only the last element. Finally, the xargs call actually removes the element. You can combine all of these commands using pipes:

ls | tail -n 1 | xargs -I {} rm {}

Of course you can put all of this into one Bash Operator, and it will work fine. But it will not give you every benefit of using Apache Airflow.

Instead, I would suggest moving each of these calls into a separate Task: first the ls, then the tail, and then the rm. When one of these tasks crashes, this allows you to:

- look into the logs to see what happened

- restart only the failed part

- debug more easily while developing new dags

- avoid rerunning computationally expensive tasks when it is not really required
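The benefit of restarting only the failed part can be sketched in plain Python. This is not Airflow code: the three functions mirror the ls, tail and rm steps, and `run_steps` is a hypothetical mini-runner that remembers the result of every finished step, so a second call resumes at the step that failed.

```python
import os
import tempfile


def list_files(directory):
    """Step 1, the `ls`: return the sorted file names in the directory."""
    return sorted(os.listdir(directory))


def pick_last(names):
    """Step 2, the `tail -n 1`: keep only the last name."""
    return names[-1]


def remove_file(directory, name):
    """Step 3, the `rm`: delete the file and report which one was removed."""
    os.remove(os.path.join(directory, name))
    return name


def run_steps(steps, results):
    """Run the steps in order, skipping those that already have a result.

    `results` survives a failure, so calling run_steps again resumes at the
    failed step instead of starting from scratch.
    """
    for name, step in steps:
        if name not in results:
            results[name] = step(results)
    return results


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        for file_name in ("a.txt", "b.txt", "c.txt"):
            open(os.path.join(workdir, file_name), "w").close()

        steps = [
            ("ls", lambda r: list_files(workdir)),
            ("tail", lambda r: pick_last(r["ls"])),
            ("rm", lambda r: remove_file(workdir, r["tail"])),
        ]
        results = run_steps(steps, {})
        print(results["rm"])                # c.txt
        print(sorted(os.listdir(workdir)))  # ['a.txt', 'b.txt']
```

If the "tail" step raised an error, `results` would still hold the output of "ls", and a second call to `run_steps` with the same dictionary would not list the directory again.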

XCom

When you have to do some cross-Task communication, as in the example above, there is a built-in mechanism called "XCom" - short for "cross-communication". With it, you only have to write Jinja templates that say where to push to and where to pull from. Storing the data itself is handled by Airflow.
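The push and pull idea can be pictured as a small key-value store, addressed by task id. The class below is a toy in-memory stand-in with hypothetical names, not the Airflow API. The default key `return_value` mirrors the key Airflow uses for a task's return value. In a real dag, a pull inside a Jinja template typically looks like `{{ ti.xcom_pull(task_ids='pick_last') }}`.

```python
import json


class ToyXCom:
    """In-memory stand-in for XCom; hypothetical, not the Airflow API."""

    def __init__(self):
        self._store = {}

    def push(self, task_id, value, key="return_value"):
        # Serialising to JSON mimics that values are stored, not shared as
        # live objects between tasks.
        self._store[(task_id, key)] = json.dumps(value)

    def pull(self, task_id, key="return_value"):
        return json.loads(self._store[(task_id, key)])


if __name__ == "__main__":
    xcom = ToyXCom()
    # The "ls" task pushes its result ...
    xcom.push("list_files", ["a.txt", "b.txt", "c.txt"])
    # ... and the "tail" task pulls it, then pushes its own result.
    last_file = xcom.pull("list_files")[-1]
    xcom.push("pick_last", last_file)
    print(xcom.pull("pick_last"))  # c.txt
```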

Python Operator

The Python Operator allows you to execute arbitrary Python code directly from Airflow. Note that your environment variables may get cleared, so you may need to add them to .bashrc or a similar file.
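Because a missing environment variable is easy to overlook, it can help to check for it at the start of the Python callable. This is a minimal sketch with hypothetical names: `require_env`, `my_task` and the variable `DATA_DIR` are made up for illustration. `my_task` stands for the kind of function a Python Operator would execute.

```python
import os


def require_env(names, environ=None):
    """Return the requested variables, or fail naming every missing one."""
    environ = os.environ if environ is None else environ
    missing = [name for name in names if name not in environ]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return {name: environ[name] for name in names}


def my_task(environ=None):
    """Hypothetical callable that a Python Operator could execute."""
    settings = require_env(["DATA_DIR"], environ)
    return f"reading input from {settings['DATA_DIR']}"


if __name__ == "__main__":
    # Passing a dict instead of os.environ keeps the demo self-contained.
    print(my_task({"DATA_DIR": "/tmp/data"}))  # reading input from /tmp/data
```

Failing early with the names of the missing variables makes the problem visible in the task log instead of surfacing later as an unrelated error.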

Global Variables

You can read and write global variables while executing a task. But you should not use them for any state management.

Everything that is related to state should be handled in the dag itself. Otherwise you lose the ability to restart tasks. At my current company, we use global variables to store credentials for third-party servers. These don't change frequently and should not influence the execution of a task (until the credentials are used).
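Why state in global variables breaks restarting can be shown with a small plain-Python sketch. The dictionary `GLOBAL_VARIABLES` is a stand-in for a global variable store, and both functions are hypothetical tasks. The first keeps its progress in the global store; the second gets everything it needs as input.

```python
# Stand-in for a global variable store shared by all tasks.
GLOBAL_VARIABLES = {"next_day_to_process": 1}


def process_with_global_state():
    """Reads and advances shared state, so a restart sees a different value."""
    day = GLOBAL_VARIABLES["next_day_to_process"]
    GLOBAL_VARIABLES["next_day_to_process"] = day + 1  # outlives the task run
    return f"processed day {day}"


def process_with_explicit_input(day):
    """Depends only on its input, so a restart repeats exactly the same work."""
    return f"processed day {day}"


if __name__ == "__main__":
    print(process_with_global_state())     # processed day 1
    print(process_with_global_state())     # restart: processed day 2
    print(process_with_explicit_input(1))  # processed day 1
    print(process_with_explicit_input(1))  # restart: processed day 1
```

Restarting the first task silently processes a different day, while the second one can be rerun as often as needed.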

Cross Communication via Dags

When you have a huge, complex pipeline, you may consider splitting it into several dags. In Apache Airflow you cannot pass data between dags directly. But you can execute a dag from the Airflow CLI with a JSON payload. Combining this with a Bash Operator and a little bit of code, you can pass data between dags with no extra library. I will do an entire blog post and video on how to do it, if you are interested in the details.

In the next post I will show you the Airflow UI, to keep the amount of text in a single post manageable :-).
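As a rough idea of the "little bit of code", the sketch below only builds the command line and does not run it. It assumes the Airflow 2.x CLI syntax `airflow dags trigger --conf` (older 1.x releases used `airflow trigger_dag` instead). The dag id `downstream_dag` and the payload key `input_path` are hypothetical. The triggered dag can then read the payload from `dag_run.conf`.

```python
import json
import shlex


def build_trigger_command(dag_id, payload):
    """Build the argument list for triggering `dag_id` with a JSON payload."""
    return ["airflow", "dags", "trigger", "--conf", json.dumps(payload), dag_id]


if __name__ == "__main__":
    command = build_trigger_command(
        "downstream_dag", {"input_path": "/data/out.csv"}
    )
    # shlex.join quotes the JSON so the line can be pasted into a shell
    # or used as the command of a Bash Operator.
    print(shlex.join(command))
```

Building the command as a list and quoting it in one place avoids hand-written quoting mistakes around the JSON.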

Thanks for reading and see you soon.